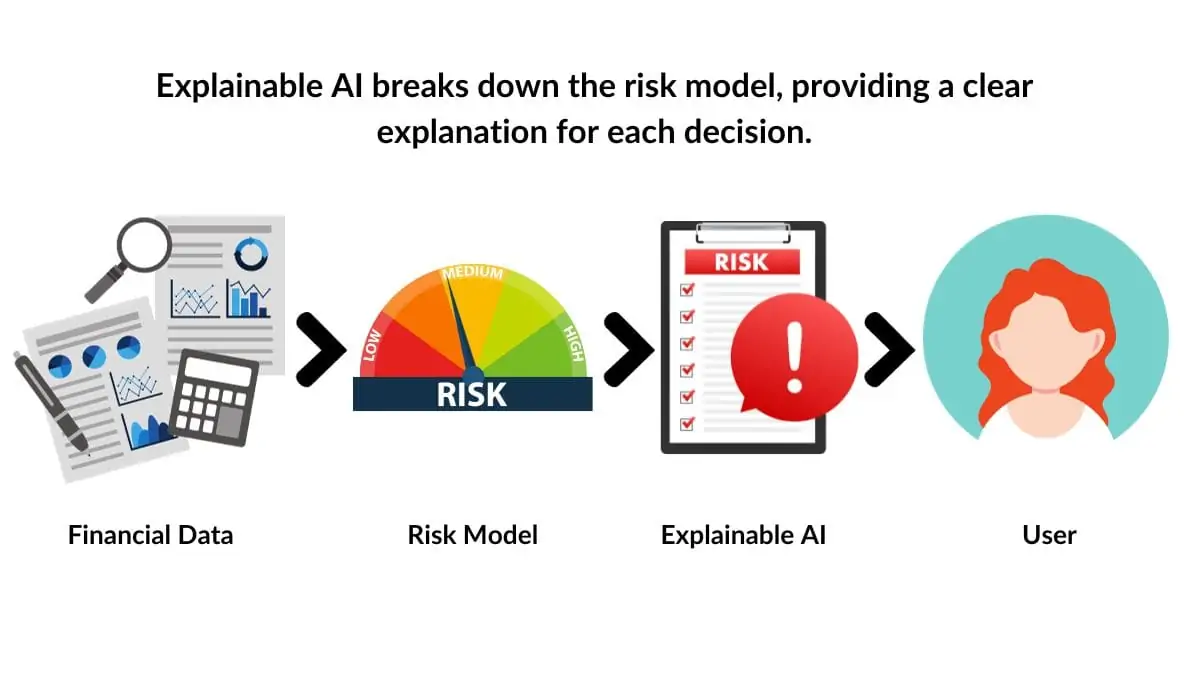

Explainable AI Market Size, Share, and Technology Advancements

The explainable AI market is expanding rapidly as regulatory requirements and organizational risk management practices increasingly mandate AI transparency and accountability. This growth reflects the convergence of regulatory pressure, ethical considerations, and practical requirements for trustworthy AI systems across industries. The market is projected to grow to USD 29.98 billion by 2035, at a compound annual growth rate (CAGR) of 16.8% over the 2025-2035 forecast period. Growth drivers include expanding AI adoption, increasing regulatory scrutiny, and growing awareness of AI risks that call for explanation and oversight capabilities. This expansion creates opportunities for technology vendors, consulting firms, and organizations developing internal explainability capabilities.
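The projection pairs an end value with a growth rate, so the implied starting size can be backed out with the standard CAGR formula. The following is a minimal Python sketch, assuming that USD 29.98 billion is the 2035 market size rather than the increase, that 2025 is the base year, and that growth compounds annually over 10 periods. The function names are illustrative and do not come from the report.

```python
def implied_base(final_value: float, cagr: float, years: int) -> float:
    """Back out the starting value from an end value and a CAGR.

    Assumes annual compounding: final = base * (1 + cagr) ** years.
    """
    return final_value / (1.0 + cagr) ** years


def cagr_from_values(base_value: float, final_value: float, years: int) -> float:
    """Compute the compound annual growth rate between two values."""
    return (final_value / base_value) ** (1.0 / years) - 1.0


if __name__ == "__main__":
    # Figures quoted in the article; the 10-year horizon is an assumption.
    final_2035 = 29.98  # USD billion
    rate = 0.168        # 16.8% CAGR
    horizon = 10        # 2025 -> 2035

    base_2025 = implied_base(final_2035, rate, horizon)
    print(f"Implied 2025 market size: USD {base_2025:.2f} billion")

    # Round trip: recovering the rate from the two endpoints.
    print(f"Recovered CAGR: {cagr_from_values(base_2025, final_2035, horizon):.1%}")
```

Under these assumptions the implied 2025 market size comes out at roughly USD 6.3 billion. A different base year or number of compounding periods would change that figure.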

Regulatory developments worldwide are establishing AI transparency and explanation requirements that drive explainability adoption. The European Union's AI Act establishes comprehensive requirements for high-risk AI systems, including transparency and explanation obligations. United States regulatory agencies, including the Federal Reserve and the Office of the Comptroller of the Currency (OCC), require model risk management practices that encompass explainability. Financial services regulations globally demand that credit decisions and other consequential determinations be explainable to affected individuals.

Accelerating enterprise AI adoption creates substantial demand for explainability capabilities alongside production deployments. Organizations are moving beyond experimental AI projects to operational systems that affect customers, employees, and business outcomes. Production AI systems require ongoing monitoring and explanation capabilities for effective governance. Stakeholder expectations for AI accountability increasingly influence deployment decisions and vendor selection.

High-stakes application domains, including healthcare, criminal justice, and financial services, impose intensive explainability requirements. Medical diagnosis and treatment recommendation systems must provide understandable reasoning to gain clinical acceptance. Criminal justice applications face scrutiny over bias and fairness, which calls for transparent decision-making. Financial services applications must explain credit, insurance, and investment decisions to regulators and customers.

Competitive advantage complements compliance as a motivation, as organizations recognize the business value of explainability. Customer trust improves when AI-powered services provide understandable explanations for recommendations and decisions. Model improvement accelerates when developers can understand and address model weaknesses. Organizations that demonstrate responsible AI practices through transparency can also differentiate themselves.

